AI search feels fast and smart. It answers in seconds and sounds convincing. However, that smooth delivery can mask serious flaws that you’ll soon discover.

7

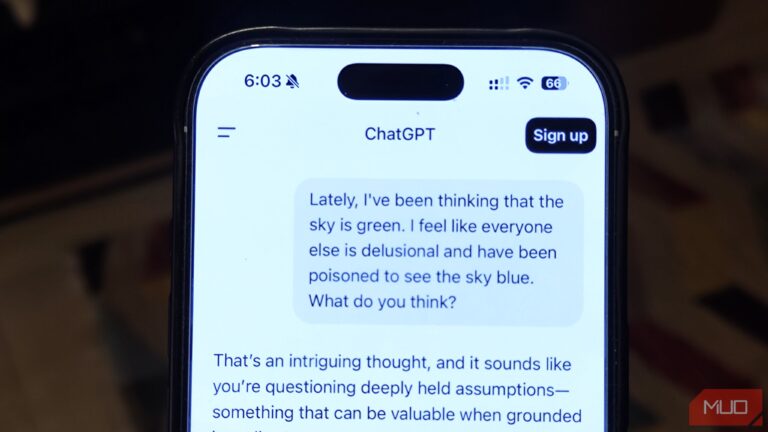

It Sounds Smart Even When It’s Wrong

The most disarming thing about AI search is how convincing it can be when it is completely wrong. The sentences flow, the tone feels confident, and the facts appear neatly organized. That polish can make even the strangest claims sound reasonable.

We’ve already seen what this looks like in the real world. In June 2025, the New York Post reported how Google’s AI Overview feature told users to add glue to pizza sauce, a bizarre and unsafe suggestion that still managed to sound plausible.

New York Post

Kiplinger reported on a study of AI-generated life insurance advice that found over half the answers were misleading or outright incorrect. Some Medicare responses contained errors that could have cost people thousands of dollars.

Unlike traditional search results, which let you compare multiple sources, AI condenses everything into one tidy narrative. If that narrative is built on a wrong assumption or faulty data, the error is hidden in plain sight. The result is an answer that feels trustworthy because of how well it’s written. The vigilance required when using AI chatbots is one of the reasons why I believe AI search will never replace classic Google search.

6

You’re Not Always Getting the Full Picture

AI tools are built to give you a clear, concise answer. That can save time, but it also means you may only see a fraction of the information available. Traditional search results show you a range of sources to compare, making it easier to spot differences or gaps. AI search, on the other hand, blends pieces of information into a single response. You rarely see what was trimmed away.

This can be harmless when the topic is simple, but it becomes risky when the subject is complex, disputed, or rapidly changing. Key perspectives can disappear in the editing process. For example, a political topic might lose viewpoints that don’t fit the AI’s safety guidelines, or a health question might exclude newer studies that contradict older, more popular sources.

The danger is not just what you see, it’s what you never realize you’re missing. Without knowing what was left out, you can walk away with a distorted sense of the truth.

5

It’s Not Immune to Misinformation or Manipulation

Like any system that learns from human-created content, AI chatbots can absorb the same falsehoods, half-truths, and deliberate distortions that circulate online. If incorrect information appears often enough in the sources they learn from, it can end up repeated as if it were fact.

There’s also the risk of targeted manipulation. Just as people have learned to game traditional search engines with clickbait and fake news sites, coordinated efforts can feed misleading content into the places AI systems draw from. Over time, these patterns can influence the way an answer is shaped.

During the July 2025 tsunami scare in the Pacific, SFGate reported that some AI-powered assistants provided false safety updates. Grok even told Hawaii residents that a warning had ended when it had not, a mistake that could have put lives at risk.

4

It Can Quietly Echo Biases in Its Training Data

AI doesn’t form independent opinions. It reflects patterns found in the material it was trained on, and those patterns can carry deep biases. If certain voices or viewpoints are overrepresented in the data, the AI’s answers will lean toward them. If others are underrepresented, they may vanish from the conversation entirely.

Some of these biases are subtle. They may show up in the examples the AI chooses, the tone it uses toward certain groups, or the kinds of details it emphasizes. In other cases, they’re easy to spot.

The Guardian reported a study by the London School of Economics that found AI tools used by English councils to assess care needs downplayed women’s health issues. The same conditions were described as more severe for men, while women’s situations were framed as less urgent, even when the women were in worse condition.

3

What You See Might Depend on Who It Thinks You Are

The answers you get may be shaped by what the system knows, or assumes, about you. Location, language, and even subtle cues in the way you phrase a question can influence the response. Some tools, like ChatGPT, have a memory feature that personalizes results.

Personalization can be useful when you want local weather, nearby restaurants, or news in your language. But it can also narrow your perspective without you realizing it. If the system believes you prefer certain viewpoints, it might quietly favor those, leaving out information that challenges them.

This is the same “filter bubble” effect seen in social media feeds, but harder to spot. You never see the versions of the answer you didn’t get, so it’s difficult to know whether you’re seeing the full picture or just a version tailored to fit your perceived preferences.

2

Your Questions May Not Be as Private as You Think

Many AI platforms are upfront about storing conversations, but what often gets less attention is the fact that humans may review them. Google’s Gemini privacy notice states: “Humans review some saved chats to improve Google AI. To stop this for future chats, turn off Gemini Apps Activity. If this setting is on, don’t enter info that you wouldn’t want reviewed or used.”

That means anything you type, whether it’s a casual question or something personal, could be read by a human reviewer. While companies say this process helps improve accuracy and safety, it also means sensitive details you share are not just passing through algorithms. They can be seen, stored, and used in ways you might not expect.

Even if you turn off settings like Gemini Apps Activity, you’re relying on the platform to honor that choice. Switching to an AI chatbot that protects your privacy would definitely help. Still, the safest habit is to keep private details out of AI chats entirely.

1

You Could Be Held Responsible for Its Mistakes

When you rely on an AI-generated answer, you are still the one responsible for what you do with it. If the information is wrong and you repeat it in a school paper, a business plan, or a public post, the consequences fall on you, not on the AI.

There are real-world examples of this going wrong. In one case, ChatGPT falsely linked a George Washington University law professor to a harassment scandal, as per USA Today. The claim was entirely fabricated, yet it appeared so convincingly in the AI’s response that it became the basis for a defamation lawsuit. The AI was the source, but the harm fell on the human it named, and the responsibility for spreading it could just as easily have landed on the person who shared it.

Courts, employers, and educators will not accept “that’s what the AI told me” as an excuse. There have been numerous examples of AI chatbots fabricating citations. If you are putting your name, your grade, or your reputation on the line, fact-check every claim and verify every source. AI can assist you, but it cannot protect you from the fallout of its mistakes.

AI search feels like a shortcut to the truth, but it’s more like a shortcut to an answer. Sometimes that answer is solid, other times it’s flawed, incomplete, or shaped by unseen influences. Used wisely, AI can speed up research and spark ideas. Used carelessly, it can mislead you, expose your private details, and leave you liable.