While tech companies are pushing their latest AI tools onto users at every turn, and promoting the benefits of AI use, consumers remain wary of the impacts of such tools, and of how beneficial they'll actually be in the long run.

That's based on the latest data from Pew Research, which conducted a series of surveys to glean more insight into how people around the world view AI, and the regulation of AI tools to ensure safety.

And as you can see in this chart, concerns about AI are particularly high in some regions:

As per Pew:

"Concerns about AI are especially widespread in the United States, Italy, Australia, Brazil and Greece, where about half of adults say they're more concerned than excited. But as few as 16% in South Korea are mainly concerned about the prospect of AI in their lives."

In some ways, the data could be indicative of AI adoption in each region, with the regions that have deployed AI tools at a broader scale seeing higher levels of concern.

Which makes sense. More and more reports suggest that AI is going to take our jobs, while studies have also raised significant concern about the impacts of AI tools on social interaction. And related: the rise of AI bots for romantic purposes could also be problematic, with even teen users engaging in romantic-like relationships with digital entities.

Essentially, we don't know what the impacts of increased reliance on AI will be, and over time, more alarms are being raised, which extend much further than just the changes to the professional environment.

The answer to this, then, is effective regulation, and ensuring that AI tools can't be misused in harmful ways. That will be difficult, because we don't have enough data to go on to know what those impacts will be, and people in some regions seem increasingly skeptical that their elected representatives will be able to assess them.

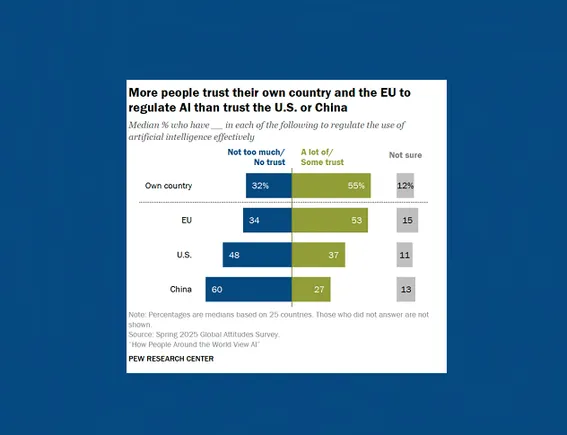

As you can see in this chart, while people in most regions trust their policymakers to manage potential AI concerns, those in the U.S. and China, the two nations leading the AI race, are showing lower levels of trust in their ability to do so.

That's likely due to the push for innovation over safety, with both nations concerned that the other will take the lead in this emerging tech if they implement too many restrictions.

Yet, at the same time, allowing so many AI tools to be publicly released is going to exacerbate such concerns, which also extend to copyright abuses, IP theft, misrepresentation, etc.

There's a whole range of problems that arise with every advanced AI model, and given the relative lack of action on social media until its negative impacts were already well embedded, it's not surprising that a lot of people are concerned that regulators are not doing enough to keep people safe.

But the AI shift is coming, which is especially evident in this demographic awareness chart:

Younger people are far more aware of AI and the capabilities of these tools, and many of them have already adopted AI into their daily processes in a growing range of ways.

That means AI tools are only going to become more prevalent, and it does feel like the rate of acceleration without adequate guardrails is going to become a problem, whether we like it or not.

But with tech companies investing billions in AI tech, and governments looking to remove red tape to maximize innovation, there's seemingly not a lot we can do to avoid these impacts.